If your CSV contains characters other than basic alpha numeric characters, you may run into encoding issues, which will cause upload failures.

Consider this text:

Cafe

It contains characters from the basic ASCII character set, and the text file can be encoded ASCII. Simple.

Now let's add some spice:

Café

Now it contains a character from the extended ISO 8859-1 character set, commonly known as Latin1, which extends upon the basic ASCII character set and includes all the characters needed for around 30 languages used throughout the Americas, Western Europe, Oceania, and much of Africa.

ISO 8859-1 extends the original ASCII character set, so they're designed to work together, but we need to account for the new characters, so we save the file encoded as Latin1 and we all get on with our lives.

Great, right?

Nope.

See, é might be from Latin1, but it might not. 😱

It's character code point is the number 233 in Latin1, and in Code page 1252, the primary Windows encoding, and even Code page 863 which was commonly used in Quebec. But, the same 233 character code point in ISO 8859-5 (aka Latin/Cyrillic) is the Cyrillic character щ.

Here's where the problems arise. Depending on who typed it, on whatever keyboard, in whatever language, using whatever system, it could be just about anything.

Any character can be in a plain text file like a CSV - there's no hard and fast rules, only an encoding header that tells the reader how to interpret the byte sequence to decode the characters. There is nothing stopping an ASCII encoded text file containing a Latin1 character, or an ISO 8859-5 character.

So, say we encounter a file with an ASCII file header that contains an 'out of range' character, there's two options:

- guess what encoding it should be and convert that character, or

- interpret the character based on the file header encoding

Problem 1: Guessing the encoding

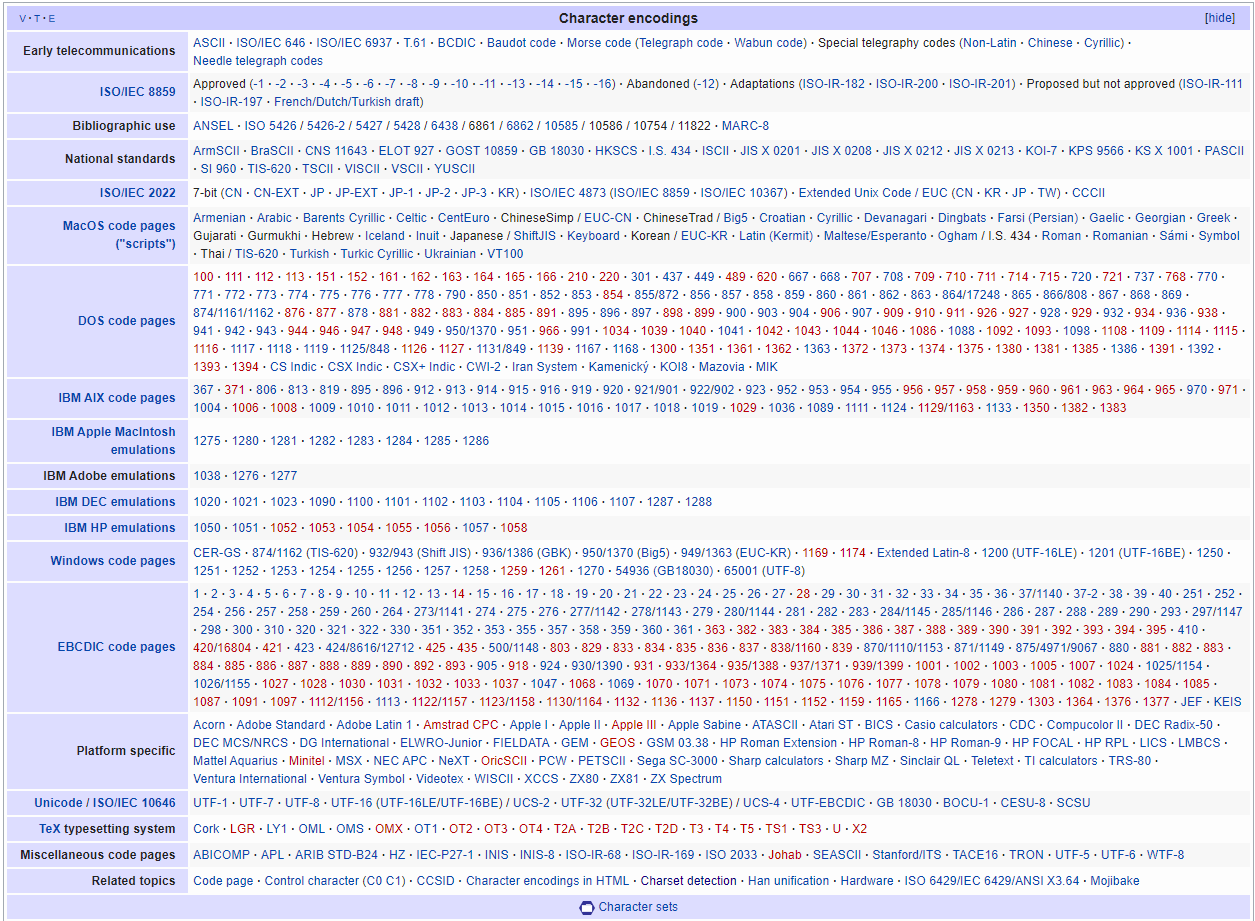

Here's a list of character encodings. Care to guess which one your random, out of range character belongs to?

As there are literally hundreds of encodings, and we have no way to tell from the byte sequence which character in which encoding this should be.

Instead of guessing, we will halt the import process.

So I hear you say, "but you guys are the experts, can't you determine the encoding of my file based on the contents?"

Yes and no. If encoding is defined in the header, we process as per the header. If it's not defined, we have to try to determine what it is.

There are methods that allow us to analyse the data and deduce the encoding based on the sampled data, and we do use them on every uploaded file. However, it's not possible to perform these analyses on every line of data that passes through the server. It has to be sampled, and an educated guess is made.

But its still a guess.

If the sampling missed that one single character that would mean the file is encoding X instead of encoding Y, then the guess is wrong, and the conversion of the characters is wrong, and you end up with Mojibake — a nonsense string of characters that makes no semantic sense. It usually looks something like this ã¯ã€ã‚³ãƒ³ãƒ”ューã‚, and leads us to Problem 2.

Problem 2: Interpreting the character in the file's reported encoding

If you interpret an out of range character that doesn't map to your file header's reported encoding, you can end up with Mojibake.

Café

or this

Caf�

or this

Caf◻

To maintain integrity of your data, we will not write your data to our servers in this form.

When we find a mismatch, the file is rejected and the upload fails.

So what's the solution?

Always work with UTF-8 encoding.

If your data comes from an older source in a different encoding, clean it, convert it, and maintain it in UTF-8. It may be painful in the short term, but it will pay off in the long run.

Not convinced? Dive deeper here.